I designed and shipped a guided AI sourcing workflow that helps brands turn early ideas into supplier-ready briefs and outreach. The goal was simple: get suppliers to respond faster and help more sourcing conversations turn into real orders.

230+ product briefs created

140 invoices generated; ~50% conversion to paid

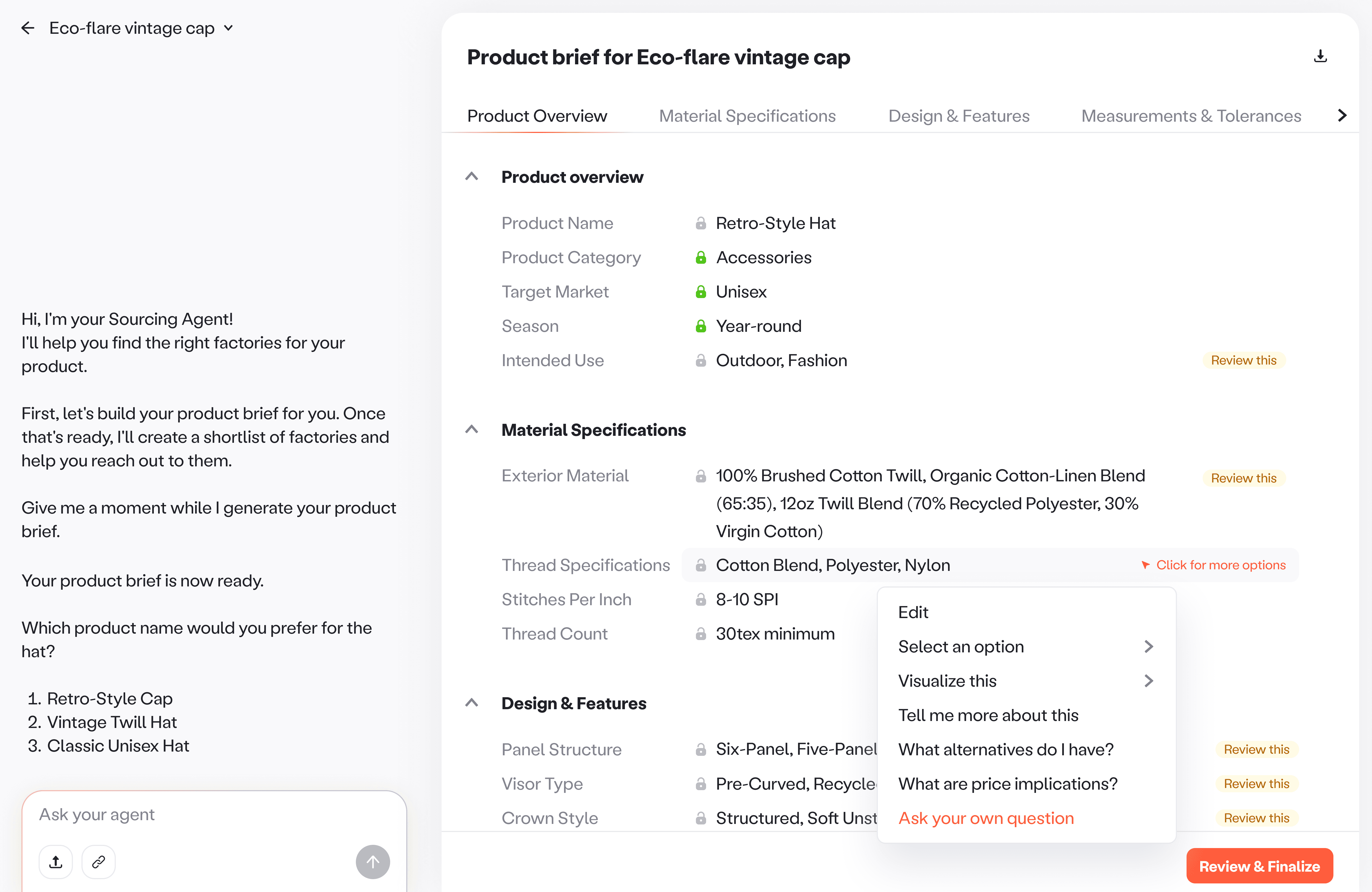

Product brief iterations

Context. Pietra offers a large network of vetted factories, which attracts many long-tail users. Many of these brands reach out to suppliers while they are still in the ideation phase, figuring out what to make.

Problem. Those first messages often miss the specs and constraints suppliers need to act on. Suppliers either do not respond, or the conversation turns into endless follow-ups to gather basic info. Either way, sourcing stalls and fewer conversations move to quotes, samples, and invoices.

Who this affects. Long-tail users in the ideation stage. Suppliers who decide whether to respond, quote, and move to sampling. Pietra, because marketplace conversion drives revenue.

Brand: Hi, I want to manufacture hoodies. Oversized fit, premium quality. What would it cost?

Supplier: Thanks. Do you have a product brief with fabric type, weight or GSM, colors, quantity, target unit cost, and timeline?

Brand: Not sure about GSM. Probably cotton or something soft. Quantity maybe 200 to start.

Supplier: Is this men or women? Any reference images?

Brand: Both. I’m still figuring things out. Just want to see what’s possible.

Supplier has ended the conversation

Without clear requirements to act on, the supplier had to keep asking for basics and eventually dropped the conversation.

Process. I reviewed past brand-supplier conversations and looked for patterns in what led to quick quotes versus stalled threads. I also ran reviews with the sourcing team to validate what suppliers actually need.

Insight. Suppliers need clear specs to quote confidently. Long-tail users do not know the right sourcing vocabulary or which details matter. When requirements were captured in a product brief, the conversation had momentum and suppliers could quote sooner.

kith inspired hats

pre-wash treatment

cotton twill

fashionable urban design

500 MOQ

Product brief for Urban comfort twill cap

General Information

Category: Caps, Hats, Headwear

Target Market: Urban fashion enthusiasts

Material Specifications

Fabric Material: 100% Combed-Cotton Twill

Panel Setup: Six evenly shaped sections

Pre-wash: Softness

Production & Quality

Timeline: 30-45 days

Sampling Costs: $75 per sample

MOQ: 500 Units

A product brief is the bridge between ideation and manufacturing. It acts as the source of truth for the request: what it is, how it should be made, and what the brand needs on MOQ, sample cost, and timing.

Leveraging AI. AI has become increasingly good at turning messy, unstructured input into clear, structured information. We used it as a guide that helps brands flesh out early ideas, learn what suppliers need, and turn that input into artifacts suppliers can act on. The goal was not automation for its own sake. It was education and speed, with transparency and user control.

Grounded in Pietra’s sourcing knowledge. Pietra sits at the center of a trusted supplier network and has deep knowledge of what factories need to evaluate a project. Over time, this created thousands of data points across categories, materials, pricing patterns, minimum order quantities, and production constraints. We used that knowledge to guide what the agent asks, how it fills gaps, and what it recommends.

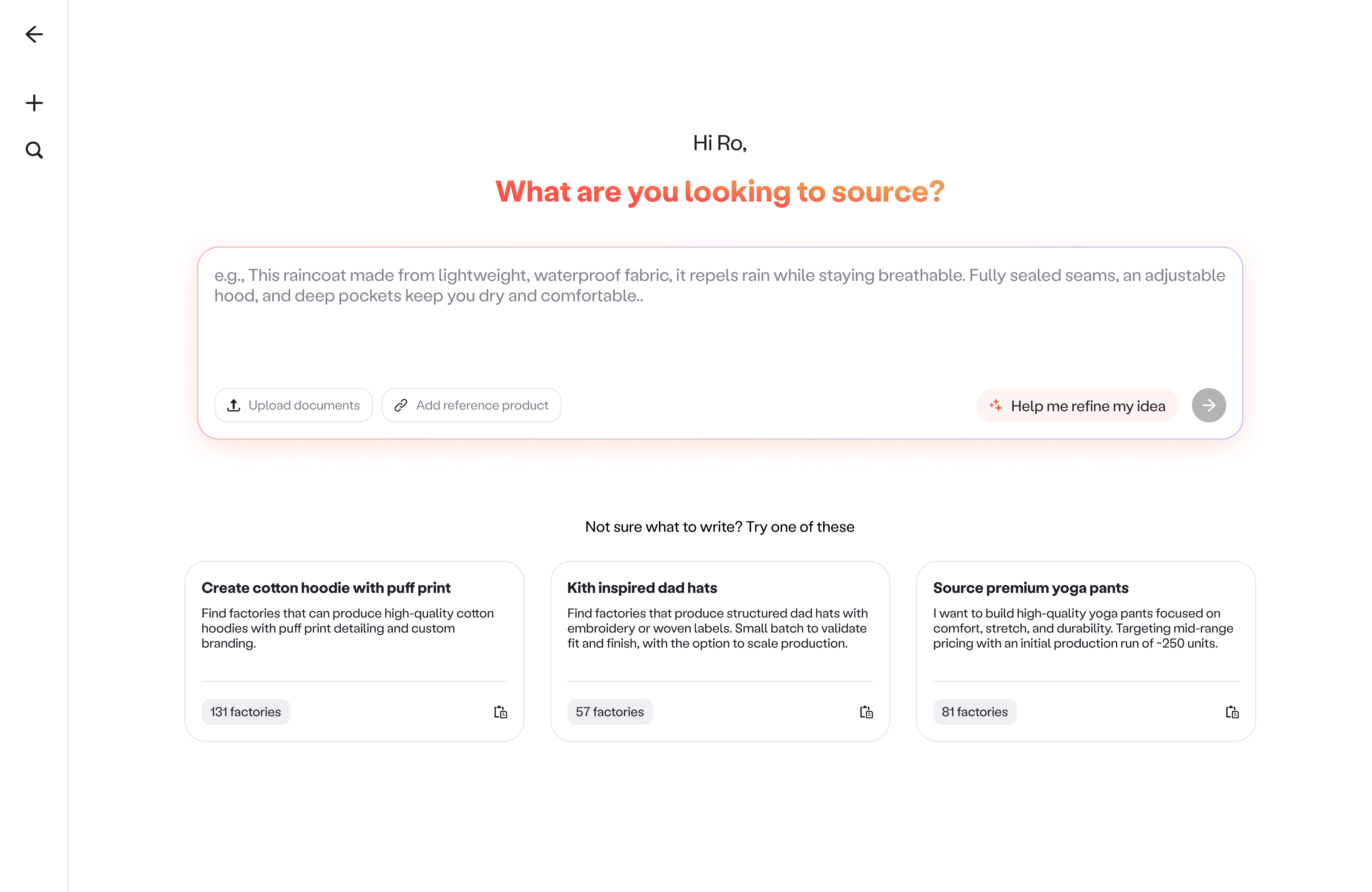

Design to support uncertainty, not punish it. The intake experience was designed to meet users where they were in their thinking, with simple examples and optional guidance.

30% of users who saw the input screen progressed to generating a product brief. This was a strong signal given that sourcing is not a frequent, everyday task and many users were exploring the feature out of curiosity at launch.

Step 1 - Input screen

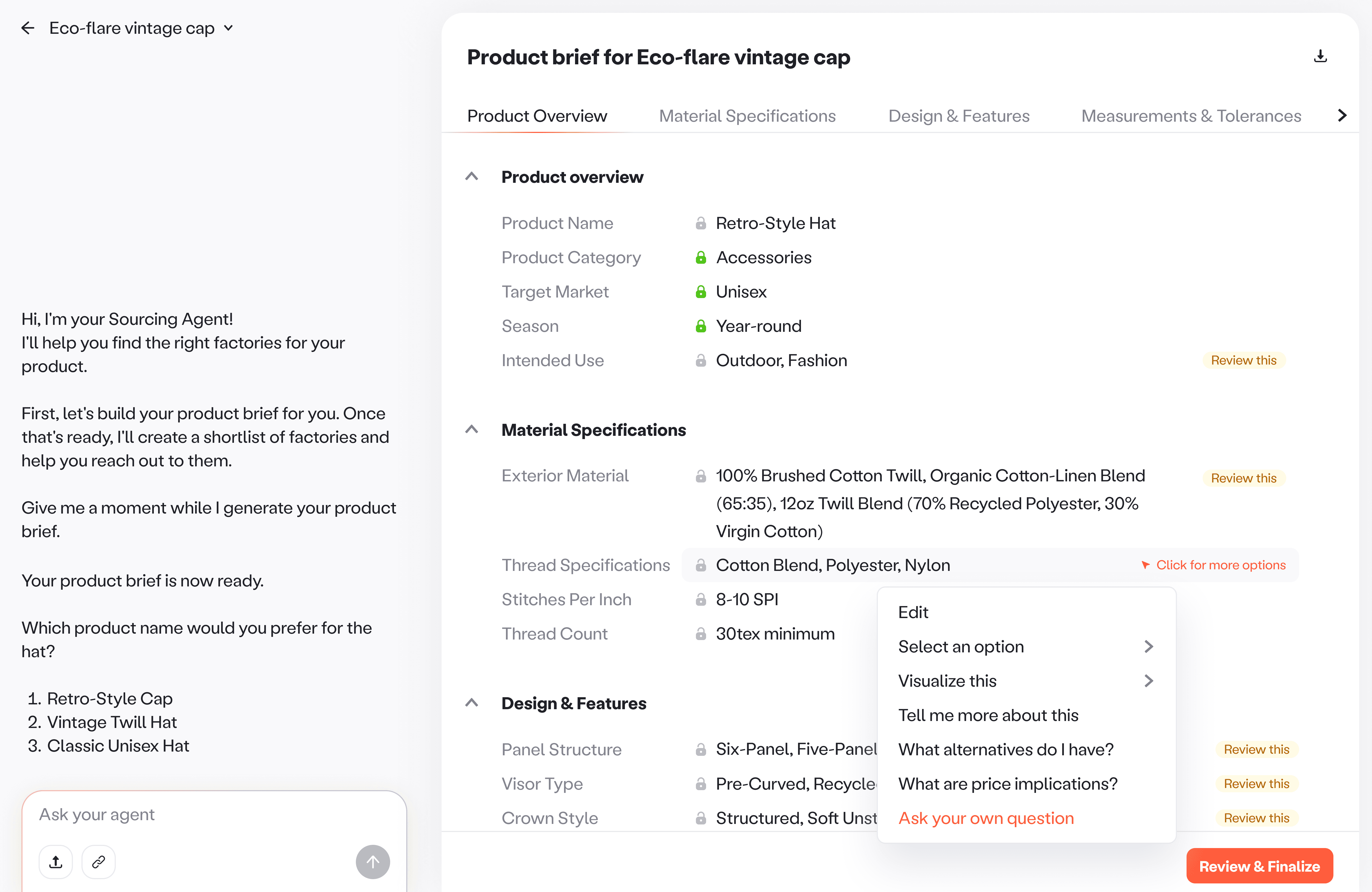

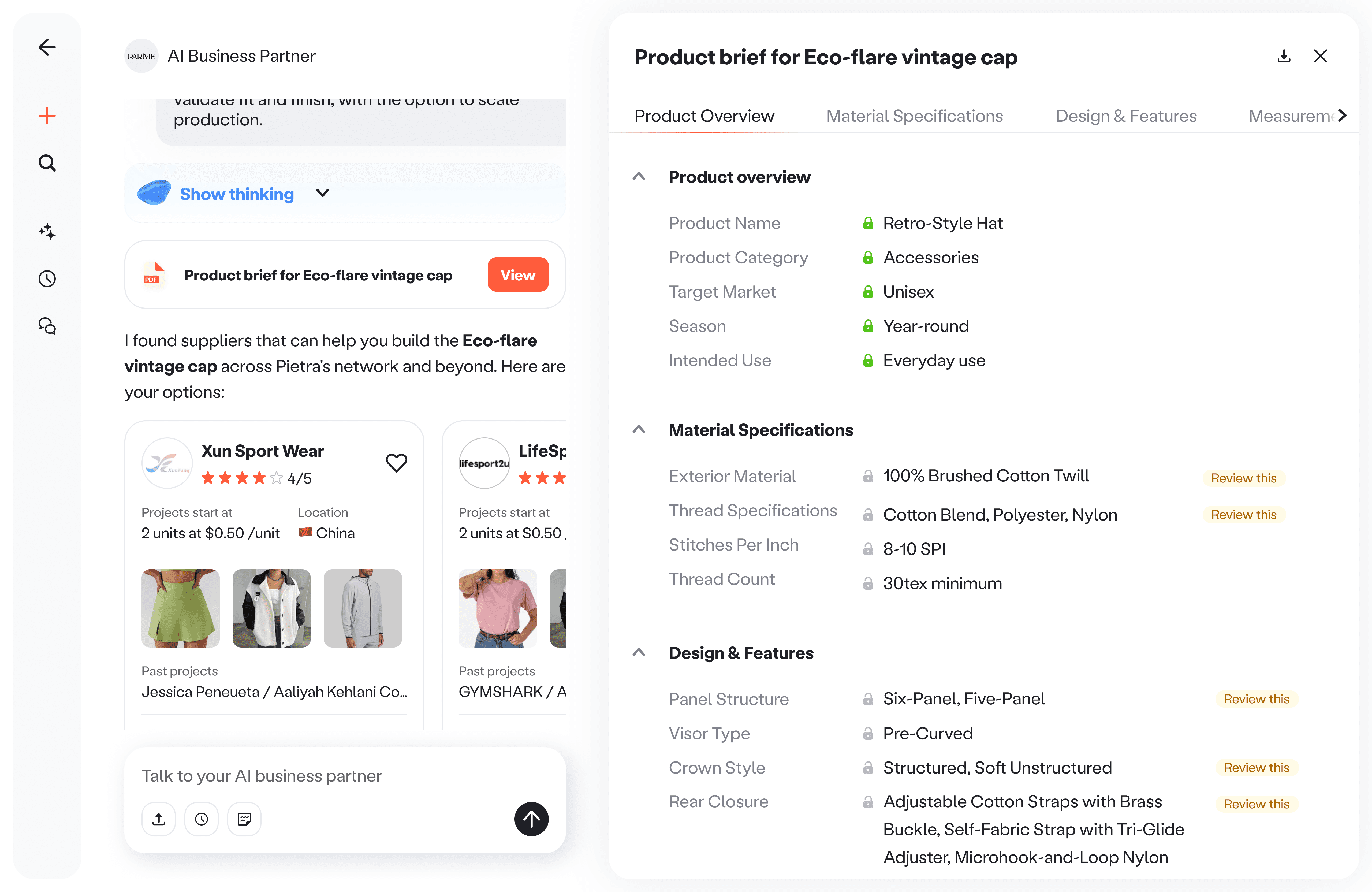

Product brief as the core artifact. User input becomes a structured product brief that captures specs plus commercial constraints. Because it directly impacts response rates and sourcing outcomes, it was designed as the primary workspace, always visible, editable, and supported by AI guidance.

55% of users who created a product brief moved on to factory recs. This indicated that the experience successfully carried momentum forward.

Step 2 - AI Product brief canvas

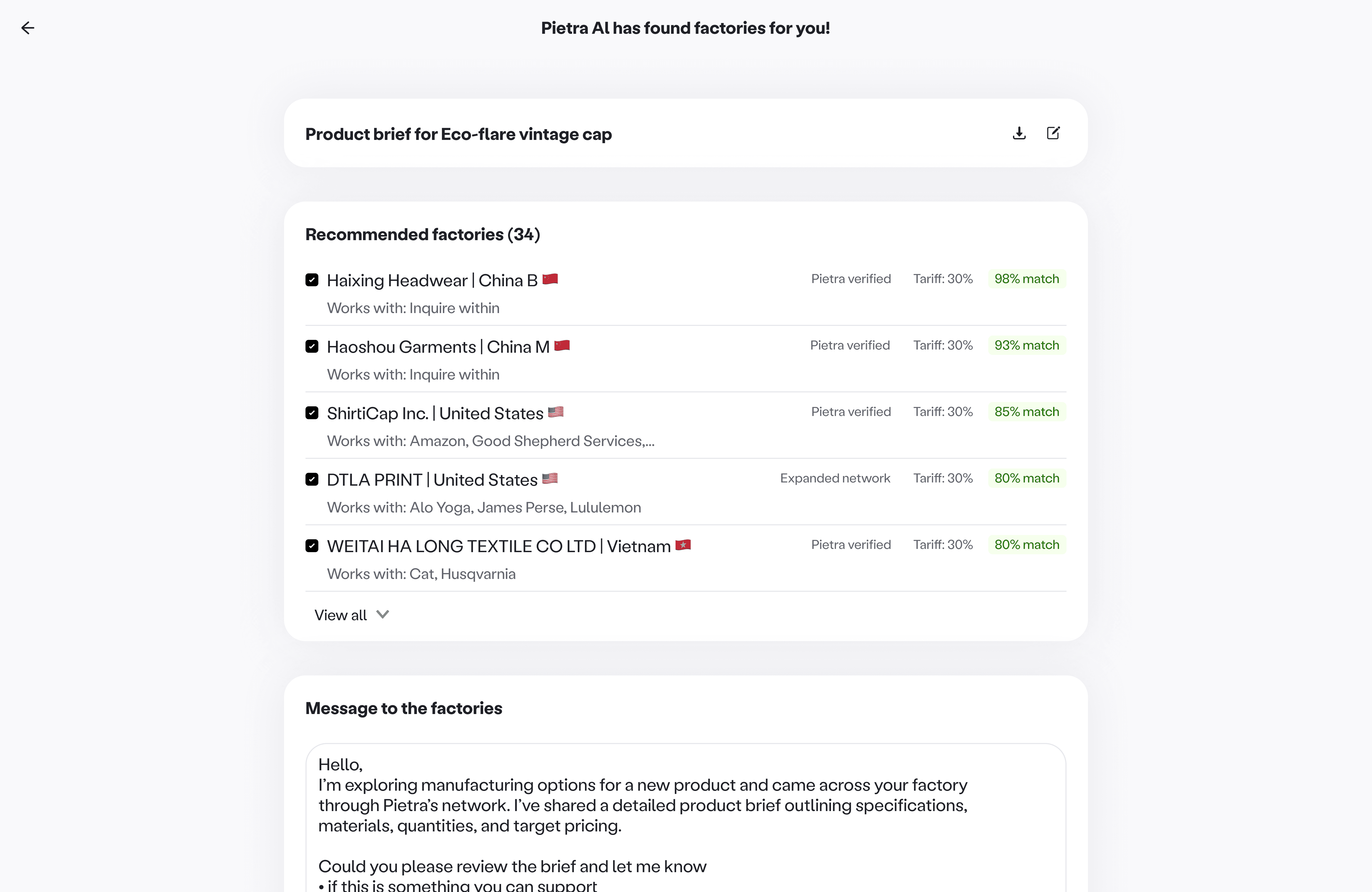

Factory matches grounded in the brief. The final step of the journey was designed to protect user effort by ensuring users reached out to the right factories, in the right way.

~80% of users who saw factory recs went on to contact suppliers. This validated that the final step removed hesitation at the point of outreach and translated preparation into action.

Step 3 - Factory recommendations
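The three step-level rates above can be chained into a rough end-to-end estimate. The sketch below assumes the percentages describe the same cohort moving through the steps in order, which the numbers above do not confirm, so treat the result (roughly 13%) as a back-of-the-envelope figure rather than a reported metric. The names `STEP_RATES` and `cumulative_conversion` are illustrative.

```python
# Step-to-step conversion rates quoted above (the last one is approximate).
STEP_RATES = [
    ("input screen -> product brief", 0.30),
    ("product brief -> factory recs", 0.55),
    ("factory recs -> supplier contact", 0.80),
]


def cumulative_conversion(rates):
    """Multiply step rates to estimate the share of starters who reach the end."""
    total = 1.0
    for _, rate in rates:
        total *= rate
    return total


overall = cumulative_conversion(STEP_RATES)
print(f"Estimated end-to-end conversion: {overall:.1%}")
```

Read this way, the largest drop is at the first step, which is why the input screen became the focus of the iterations that follow.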

Hypothesis. If the brief feels close to complete, users are more likely to keep going, view factory matches, and reach out.

Constraints. Generating a brief takes ~1-2 minutes, so value is delayed. Vague inputs force the agent to guess, which pushes more cleanup work back onto the user.

Goal. Encourage stronger input so the brief is closer to complete and users don't abandon the flow.

Iteration 1

Auto enhance on send. Leadership pushed for a lightweight way to strengthen input before brief generation. After a user hits send, the system takes their input and generates 2-3 improved prompt options that try to fill gaps quickly.

Problem. Auto enhance added a second wait before users saw value. Users waited ~30 seconds for prompt options, then another 1-2 minutes for the brief. It felt heavy, interrupted momentum, and made the tool feel slow.

Auto enhance on send. Prototyped using Claude.

Iteration 2

Better inputs through guidance, not a forced detour. We still needed stronger inputs, but without adding more waiting before the brief.

Prompt templates. Short, copyable examples that show what a strong request looks like. This helped users move past the one-word inputs we saw early on, like "cotton", "t shirt", or "hats".

Prompt templates on input screen

Help me refine, optional. We kept enhancement, but made it user controlled. New founders could pull suggestions when they wanted them, while experienced users could move forward without friction.

Optional enhanced input experience

Guided completion as a fail-safe. To take this one step further, the agent walks through fields one by one. It asks focused questions, suggests missing constraints, and makes it easy to confirm or edit, so users never feel stuck staring at a long document.

The agent guided users field by field to fill gaps and confirm edits

Why it worked. Users could start fast, reach the brief sooner, and still end up with a stronger, more supplier-ready brief through optional guidance and guided refinement.

Starting point. The team aligned on laying out the product brief in a canvas so it was more scannable than a wall of text.

Problem. Even in canvas form, the brief still behaved like static content. Every change turned into a loop of typing, waiting, rereading, and repeating. It felt slow and cumbersome.

Proposal and pushback. I proposed making the brief interactive so users could act on the artifact directly, instead of routing every micro decision through chat.

The pushback was fair. This required new interaction patterns and careful system state handling, and it was a product bet, so we needed proof of usage before committing to heavier engineering.

Iteration 1

Unblock refinement with minimal build. We shipped a lightweight first pass that made refinement possible without building full interactivity yet.

AI chat. The agent walked through fields one by one to help users complete the brief, so users could keep moving without needing to know what to answer next.

The agent guided users field by field to fill gaps and confirm edits

Brief canvas. We introduced "Review this" cues on fields where the agent made assumptions or needed user confirmation, so users could focus on the highest-risk gaps first.

"Review this" cues highlighted fields that needed user confirmation, turning a static brief into a guided checklist.

Iteration 2, 3, …

Make the brief interactive. I collaborated closely with the AI and engineering leads to map what we could ship on day 1 versus day 30, then evolved the canvas into a workspace.

Inline actions. We added a field-level menu with common questions we shaped with the sourcing team. Users could pick an option, ask from a preset list, or ask their own question, so decisions stayed fast and in context.

Field actions moved refinement into the brief

Lock and skip. In sandbox testing, we observed that the agent kept asking about fields users had already decided. Locking keeps confirmed fields fixed so the agent skips them, which removes repeat questions and keeps momentum.

Auto lock was applied to fields that were already explicit in the brief, and to any field where the user confirmed a choice.
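The lock and skip behavior described above can be sketched in a few lines. This is a minimal illustration, not the shipped implementation: the `BriefField` type, the `explicit` flag, and the function names are all hypothetical, and the field values are borrowed from the example brief earlier in this case study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class BriefField:
    name: str
    value: Optional[str] = None
    explicit: bool = False  # hypothetical flag: the user stated this value outright
    locked: bool = False


def auto_lock(fields):
    """Lock fields whose values were already explicit in the brief."""
    for field in fields:
        if field.explicit and field.value is not None:
            field.locked = True


def confirm(field, value):
    """A user confirmation sets the value and locks the field."""
    field.value = value
    field.locked = True


def next_field_to_ask(fields):
    """Return the first unlocked field, or None when every field is locked."""
    for field in fields:
        if not field.locked:
            return field
    return None


fields = [
    BriefField("Fabric Material", "100% Combed-Cotton Twill", explicit=True),
    BriefField("MOQ", "500 Units", explicit=True),
    BriefField("Timeline", "30-45 days"),  # filled in by the agent, not yet confirmed
]
auto_lock(fields)
first = next_field_to_ask(fields)
print(first.name)                 # Timeline
confirm(first, "30-45 days")
print(next_field_to_ask(fields))  # None
```

Because the agent only ever asks about the result of `next_field_to_ask`, a locked field can never trigger a repeat question, which is the behavior the sandbox testing called for.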

Visualize for ambiguous details. The sourcing team pointed out that some details are hard to validate in text. We used our existing image generation capabilities to visualize options using the full brief context, helping users confirm details and make decisions faster.

Visualize brought the brief to life with context-aware images, making it easier to align on details before outreach.

Why it worked. The brief stopped feeling like an output. It became a workspace users could scan, refine, and lock down until it was supplier-ready.

A new challenge post-launch. As AI started helping brands identify more relevant factories, users were often reaching out to a larger number of suppliers than before. That was a win for reach, but it also multiplied the work of managing conversations.

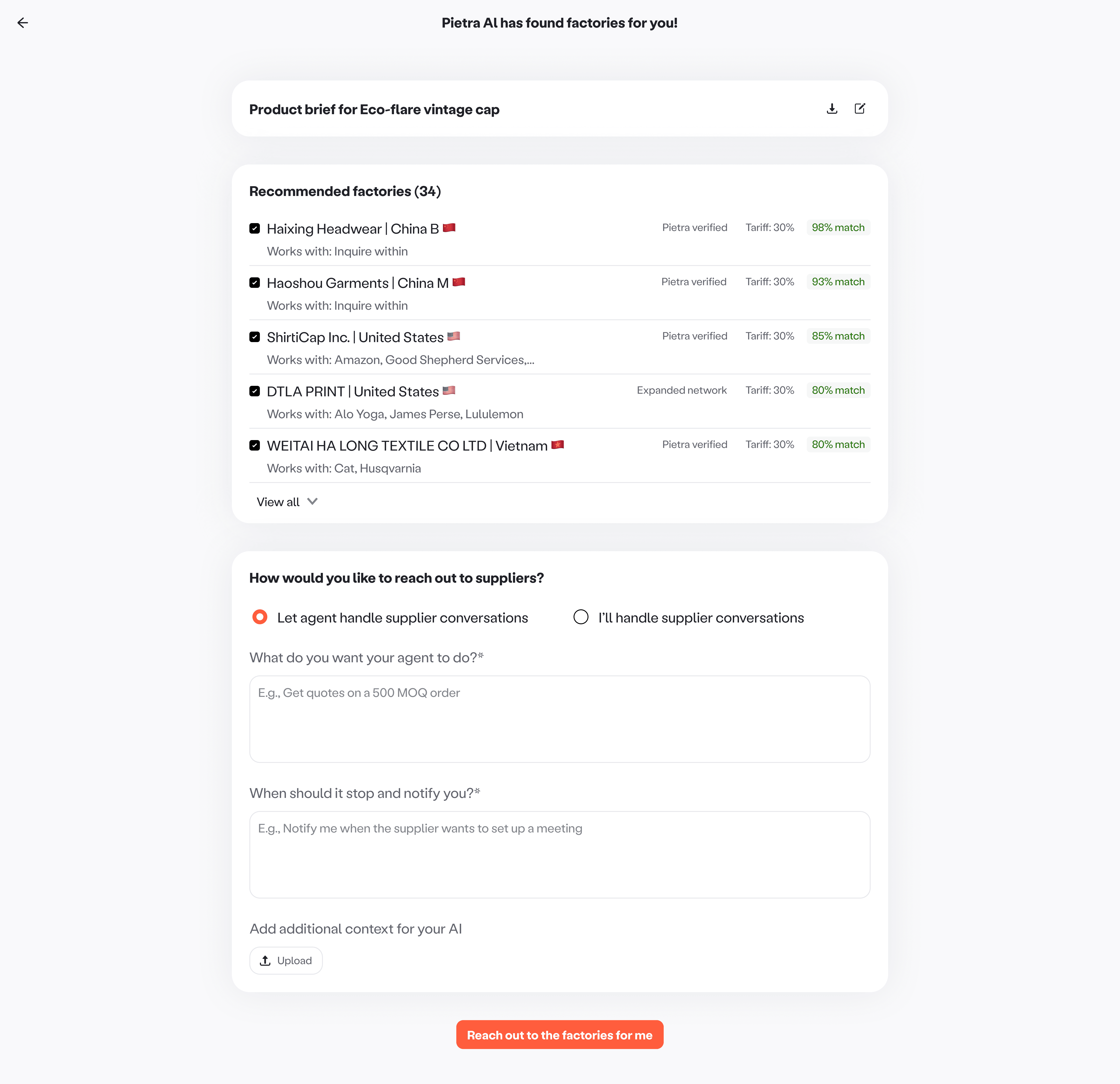

Goal. Help users scale outreach while staying focused on decisions instead of coordination.

Extending automation into messaging. Brands could keep conversations manual, or deploy an agent to handle outreach and follow-ups.

~90% of users who turned on automated messaging kept it enabled. This indicated that goal-based automation reduced overhead without compromising trust or control.

Automating supplier outreach

Goal-driven automation. Users set what information to collect and what to report back, keeping the agent focused on outcomes.

Goal-based automation

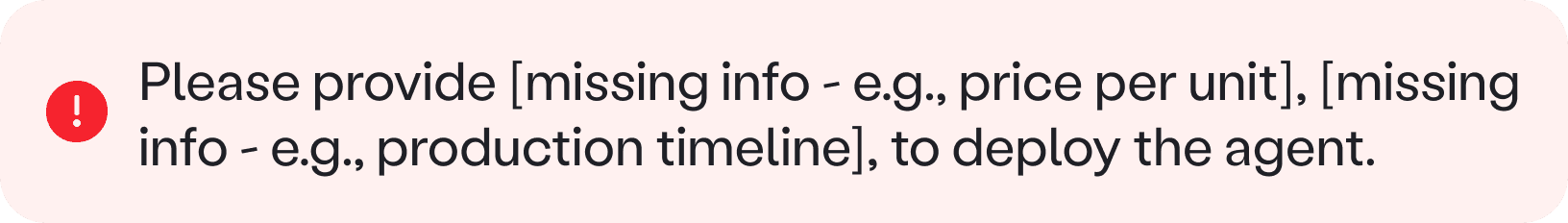

Guardrails and recovery. Before deployment, we validated the brief and goal, surfaced missing info clearly, and offered quick fixes. This ensured the agent entered conversations with enough context to be effective.

LLM-based information validation layer

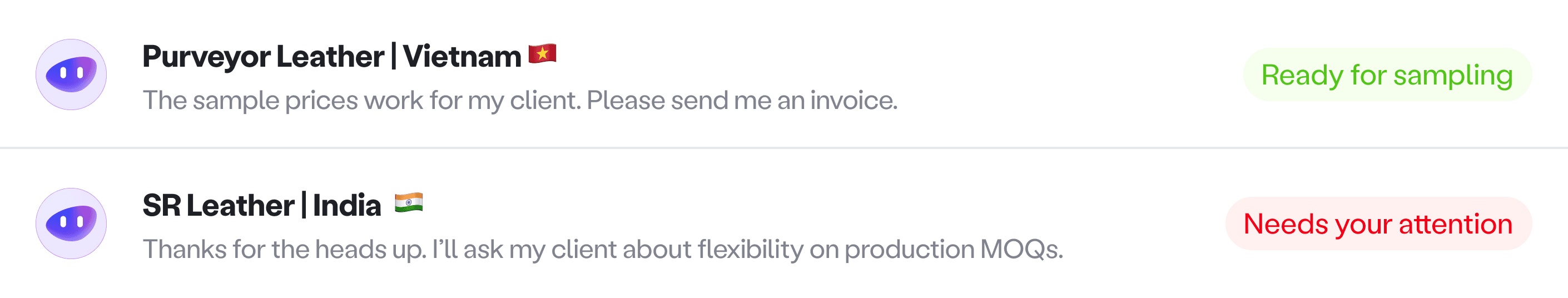
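To make the pre-deployment check concrete, here is a minimal sketch of the idea. It swaps in a plain rule-based check for the LLM-based validation layer named above, so it only shows the shape of the step: validate the brief and the goal, then surface what is missing. The required field list, the `collect` and `report_back` keys, and the function name are assumptions for illustration.

```python
# Illustrative subset of brief fields; the real required set is not stated in this case study.
REQUIRED_BRIEF_FIELDS = ["Category", "Fabric Material", "MOQ", "Timeline"]


def validate_before_deploy(brief, goal):
    """Return human-readable issues; an empty list means the agent can be deployed."""
    issues = []
    for name in REQUIRED_BRIEF_FIELDS:
        if not str(brief.get(name, "")).strip():
            issues.append(f"Brief is missing: {name}")
    if not goal.get("collect"):
        issues.append("Goal does not say what information to collect")
    if not goal.get("report_back"):
        issues.append("Goal does not say what to report back")
    return issues


brief = {
    "Category": "Caps, Hats, Headwear",
    "Fabric Material": "100% Combed-Cotton Twill",
    "MOQ": "500 Units",
}
goal = {"collect": ["unit price at 500 units", "lead time"], "report_back": []}

for issue in validate_before_deploy(brief, goal):
    print(issue)
# Brief is missing: Timeline
# Goal does not say what to report back
```

Each issue maps to one gap the user can fix before the agent starts a conversation, which is where the quick fixes would attach.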

Scannable progress and clear control. We summarized threads, tagged them by outcome, and gave users simple controls to edit goals, stop, or take over. Notifications only fired when human input was needed.

Agent inbox

Sourcing AI was Pietra’s first successful AI-native workflow, proving that guided automation could meaningfully improve a complex, high-stakes process like sourcing. This integration marked the transition from an experimental AI feature to a foundational capability embedded in the product’s day-to-day workflows.

Pietra Copilot